Spatial computing is becoming ubiquitous, and as a result there are more AR designers than ever. Many of us have come from 2D screen design with a litany of patterns and concepts in tow, and we often try to apply those patterns to spatial experiences.

But along with a third spatial dimension to our work come new physical, human complexities to unpack and solve for. Our two-dimensional design patterns are still useful, but cannot possibly account for a user who exists within the design itself. Yet, this is the nature of AR—it is a medium that must respond to the human participant inside of its physical space.

To better understand how to design spatial relationships between people and content in AR, we must begin constructing new patterns.

Interacting with the space around us

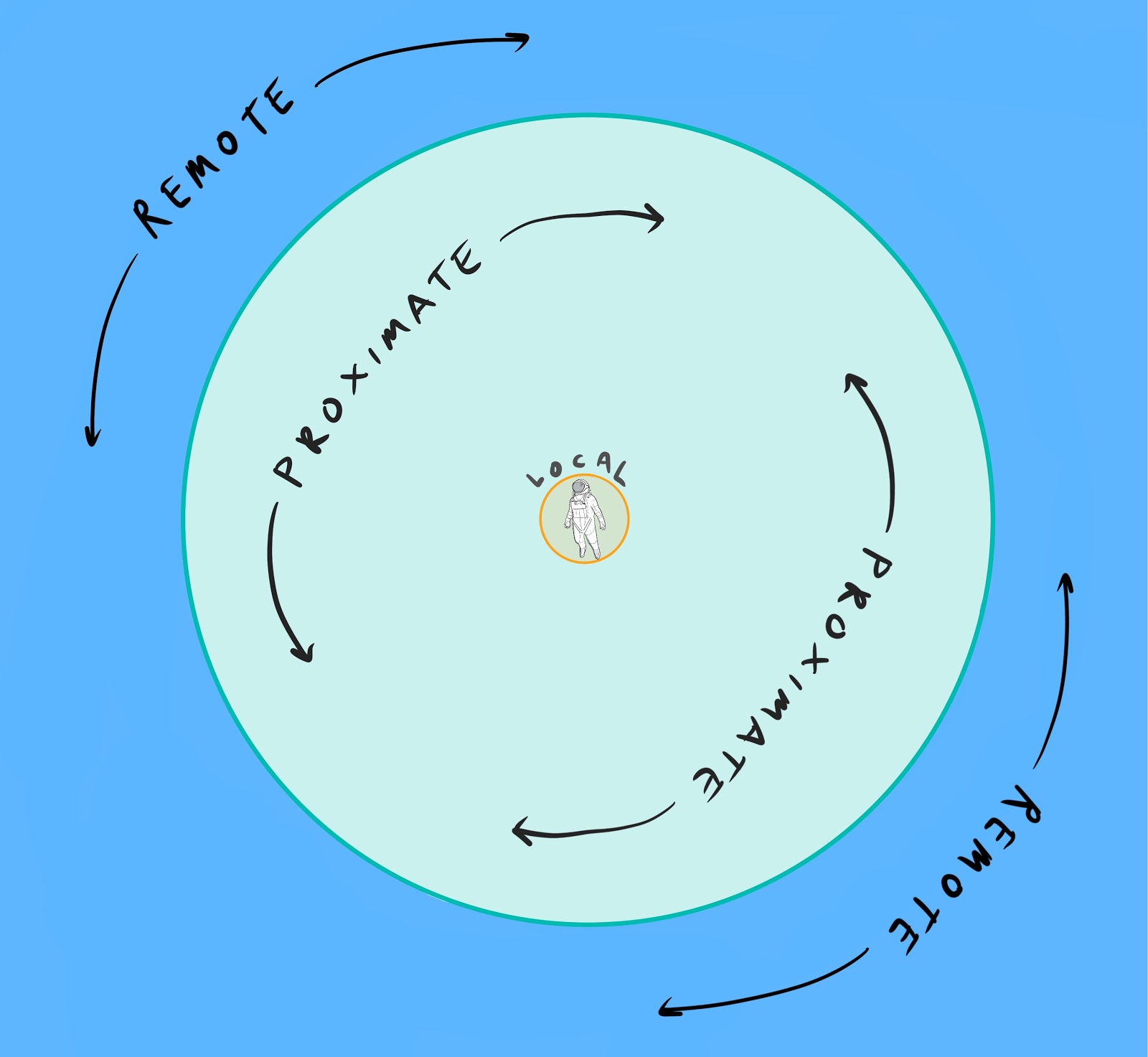

One pattern about which we can begin to reason is interactive space—the regions around the participant where we can engage, draw attention, and provide context.

When we talk about interactive space in AR design, we can focus on three primary regions of real-world interaction. These are spatial regions we have been reasoning about since the moment we were born. They play a second-nature role in guiding our actions as we move through and interact with the world around us.

In theater and film, we refer to regions of space as foreground, middle ground, and background. Yet these terms restrict us to patterns based on observation. They don’t account for the agency and control that participants in XR wield.

Lacking a readymade set of terms to describe interactive space in XR, I’ve landed on three that I feel do the trick quite nicely. Ranging from most interactive to least, they are: local, proximate, and remote.

Local space

Local space is the region that extends from about seven centimeters in front of your face outward to the tips of your fingers. These distances are, respectively, roughly the closest distance at which human eyes can focus and the farthest distance at which we can touch things.

For obvious reasons, we don't like to interact with objects that sit closer than our eyes can focus. Apart from the blurring that happens at this distance, objects this close pose a threat to our eyes, and we instinctively protect them.

At the far end of this range are our hands, our most valuable tools for interacting with the world. They are remarkably refined, sensitive and strong at the same time. Reaching objects that sit beyond our fingertips, however, requires us to move, and moving costs calories.

And we like to conserve energy. We like to use efficient tools at an efficient range. This makes local space the most attractive region to our tactile senses. If we don't have to move, advantage human.

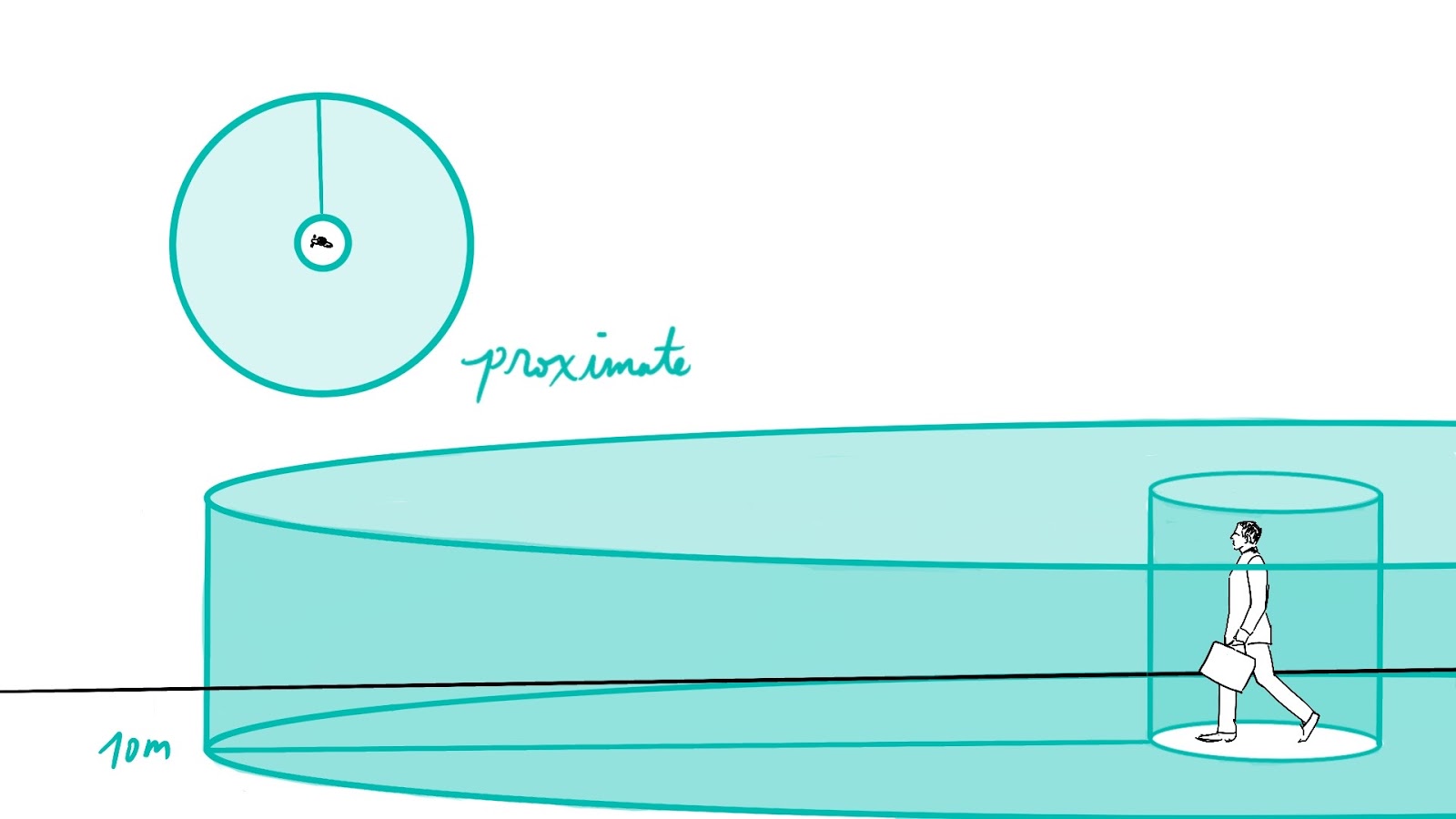

Proximate space

We find proximate space in a tube-shaped volume centered on the participant. The inner radius is the participant’s reach, and the outer radius is the distance at which binocular convergence begins to weaken, about 10 meters. At this outer distance our ability to perceive depth wanes, making it harder for us to target and track objects of import.

The height of proximate space is the participant’s overhead reach—since we cannot ascend vertically in space without mechanical intervention.
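
As a rough sketch, the tube can be written as a hollow-cylinder test. The function name, the coordinate convention (participant standing at the origin, y pointing up, units in meters), and the default reach values are assumptions made for illustration. Only the 10-meter outer radius comes from the description above.

```python
import math

OUTER_RADIUS_M = 10.0  # approximate limit of binocular convergence, from the text


def in_proximate_space(x, y, z, reach_m=0.7, overhead_reach_m=2.2):
    """Return True if a point lies inside the tube around the participant.

    x and z are horizontal offsets and y is height above the floor, in meters.
    reach_m and overhead_reach_m are illustrative defaults, not measured values.
    """
    horizontal = math.hypot(x, z)  # distance along the floor plane
    within_ring = reach_m < horizontal < OUTER_RADIUS_M
    within_height = 0.0 <= y <= overhead_reach_m
    return within_ring and within_height
```

In a real experience the reach values would come from the participant, not from constants, since arm length and height vary from person to person.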

Interacting with objects in this space requires more energy than we need for local space. Yet our cosmically young DNA (a mere 300,000 or so years old) is still highly motivated to investigate and interact with objects within proximate space. After all, our ancestors flourished as hunter-gatherers.

Proximate objects do not demand a huge amount of energy to attain. An able-bodied, full-sized adult covers between 67 and 78 centimeters per step, which puts proximate objects between one and roughly 13 to 15 steps away. For such people this distance is not terribly large.
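
A quick check of that arithmetic, assuming the 10-meter outer radius of proximate space and the step lengths quoted above. The helper name is hypothetical. The longer step reaches the edge in 13 steps, and the shorter one pushes the count to 15.

```python
import math

PROXIMATE_LIMIT_M = 10.0          # outer radius of proximate space, from the text
STEP_LENGTHS_M = (0.67, 0.78)     # step-length range quoted in the text


def steps_to_cover(distance_m, step_length_m):
    """Whole steps needed to walk a distance at a given step length."""
    return math.ceil(distance_m / step_length_m)


for step in STEP_LENGTHS_M:
    count = steps_to_cover(PROXIMATE_LIMIT_M, step)
    print(f"{step:.2f} m per step: {count} steps to cover {PROXIMATE_LIMIT_M:.0f} m")
```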

Within this 10-meter region of space, able eyes work at full capacity, taking advantage of binocular and monocular cues for judging depth. As a result, we have a keen level of certainty about the position of proximate objects in this space. This makes proximate objects wonderful candidates for spurring movement in an interaction: we are able to make quick, reasonable assumptions about the cost of arriving at such objects.

Remote space

Remote space is the region beyond binocular convergence—10 meters or greater. At this range, we run out of binocular cues to provide us with absolute distance information. In other words, we are less sure how far away things lie in remote space.
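
To make the three boundaries concrete, here is a minimal sketch that sorts a distance into a region. The function name, the "too close" label, and the default reach of 0.7 meters are assumptions for illustration. The 7-centimeter and 10-meter thresholds are the approximate figures given in this article.

```python
NEAR_FOCUS_M = 0.07    # closest distance at which eyes can focus, from the text
CONVERGENCE_M = 10.0   # approximate limit of binocular convergence, from the text


def classify_region(distance_m, reach_m=0.7):
    """Map a distance from the participant to an interactive region name.

    reach_m is an illustrative default; real reach varies per participant.
    """
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    if distance_m < NEAR_FOCUS_M:
        return "too close"   # closer than the eyes can focus
    if distance_m <= reach_m:
        return "local"
    if distance_m < CONVERGENCE_M:
        return "proximate"
    return "remote"          # 10 meters or greater
```

For example, `classify_region(0.4)` returns `"local"` and `classify_region(25.0)` returns `"remote"`.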

This uncertainty makes predicting the energy expenditure required to reach an object more difficult. This, in turn, precipitates a natural decrease in our motivation to arrive at remote objects.

As objects recede further into remote space, they take up fewer degrees of arc in our field of view, so more information is packed into a smaller visual area. This makes remote content harder to track and target. At this range our eyes strain a bit more, and the fine motor control of our hands becomes less accurate.
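
The shrinking arc follows from the standard visual-angle relation: an object of width w seen face-on at distance d subtends an angle of 2·atan(w / 2d). The sketch below applies it to a hypothetical 30-centimeter panel. The panel size and sample distances are assumptions chosen for illustration.

```python
import math


def angular_size_deg(width_m, distance_m):
    """Visual angle, in degrees, subtended by an object viewed face-on."""
    return math.degrees(2 * math.atan(width_m / (2 * distance_m)))


PANEL_WIDTH_M = 0.3  # hypothetical panel width

for distance in (0.5, 5.0, 20.0):
    angle = angular_size_deg(PANEL_WIDTH_M, distance)
    print(f"{distance:>4.1f} m: {angle:.1f} degrees")
```

The same panel drops from roughly 33 degrees at half a meter to under one degree at 20 meters, which is why a target that is easy to hit up close becomes fiddly at a distance.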

This is true whether we target with our eyes or with a remote pointer like you'd find with a Magic Leap headset. The added difficulty lowers our motivation to engage with objects in remote space and raises our frustration.

Again, our biology prefers we do less and get more.

Using interactive space in your AR experiences

So, how can we apply what we know about interactive space to our AR experiences? Let's take a look at the three regions again, this time through the lens of augmented reality content.

Local content: engagement at arm’s reach

By placing content in the participant's local space, you ask for engagement. From babe to elder, we love to reach out and touch things at this range. This interactive region is perfect for content that needs direct manipulation, such as pressable UI, photospheres, and product pickers.

Local content is primed for interaction. Because it sits close to the participant’s fingertips, it makes sense to use local content with touch/select interactions. In doing so, you will leverage the participant’s natural curiosity and activate key story elements that help drive your narrative.

Content in local space is great for controlling an experience—think of the dashboard of a car or airplane. At this close distance, instrumentation for operating the rest of an experience is easy to reach, target, and use. Keep high-action items that drive your experience close to the participant.

Proximate content: guiding the participant through space

Proximate content captures attention, but sits out of reach, creating a sense of attraction. With an appropriate design, proximate content can function as the lifeblood of a narrative experience. It has the ability to pull the participant through space toward interaction.

Content in this region is also very valuable in a non-interactive state. Directional UI, motion cues, and narrative hints do not need to be touchable, gaze-enabled, or have proximity triggers to be useful. Because we are motivated to investigate proximate space, these elements carry extra relevance for the participant when placed there.

Whereas in the real world we need to approach objects in proximate space for them to be attainable, this is not so in AR. Occupying a significant arc in our field of view, and being trackable in stereo, proximate content is primed for gaze interaction.

With this in mind, you can think of proximate content as an attractor. Use it to pull the participant in the direction of your narrative. Engage the participant with gaze triggers to bring content at this range to life in a way that supersedes reality to enhance the narrative.

Remote content: atmospheric engagement for your experiences

Remote content is best used for creating atmosphere and high-level waypoints in your experience. Sitting beyond binocular convergence, it is harder for the participant to localize this content. This diminishes its relevance to the experience narrative from a tactile standpoint.

In spite of this, remote content can be used in much the same way as proximate content: to draw the participant to a new local space. Be deliberate, however, about how you use content in remote space. Unless you are creating an experience meant to be carried out over large distances, remote content will be harder for users to engage with. Unlike VR, AR doesn’t make heavy use of teleportation, since the real world is our backdrop (and we can’t actually teleport).

When you want your remote content to be directly interactive, however, gaze is a great choice for your interaction trigger. In spite of not being able to touch objects in remote space, we are fond of looking at them. Again, in AR, unlike in real life, we get to use our eyes like very long, invisible fingers, which is quite engaging. Use that to bring your remote content into play.

But keep in mind one significant challenge to remote content: interference from the real world. Unlike with VR, in which we create and control the entire environment, AR uses the real environment as its setting. Because of this, the real world will already serve as a kind of remote content, competing for relevance with digital artifacts in that space.
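
The guidance for the three regions can be condensed into a small lookup table. This is only one way to summarize it. The class, field, and function names are hypothetical and do not belong to any AR toolkit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegionGuidance:
    role: str            # what content in this region is best used for
    interactions: tuple  # interaction triggers suggested in this article


GUIDANCE = {
    "local": RegionGuidance(
        role="direct control and manipulation",
        interactions=("touch", "select"),
    ),
    "proximate": RegionGuidance(
        role="attractor that pulls the participant through space",
        interactions=("gaze",),
    ),
    "remote": RegionGuidance(
        role="atmosphere and high-level waypoints",
        interactions=("gaze",),
    ),
}


def suggested_interactions(region):
    """Look up the interaction triggers suggested for a region."""
    return GUIDANCE[region].interactions
```

A table like this is a starting point for a design review, not a rule. Proximate and remote content can also be left non-interactive, as described above.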

Using interactive space in storytelling

AR presents designers coming from the 2D world with a significant new challenge. Until recently, we’ve only had to consider the user as someone external to the designed artifact. Although there is interaction to solve for, screen design never incorporates the physical user into the design itself.

The challenge we face now is making sense of the space around the incorporated human participant, since their presence is a part of the spatial design of an AR experience. As they move, their position changes the spatial relationship they have with the content in an experience. Thus does the experience also change with each new perspective.

Beginning to reason about how we interact with space, in general, is critical. By breaking space into interactive regions, we can begin to define interaction from the human outward, and begin designing better AR experiences.

---

If you enjoyed this read, share it! I’ll also be speaking about responsive AR design at Augmented World Expo EU in Munich on October 17 of this year. I’d love to chat more with you about AR and other challenges we face as designers in an emergent medium. If you’re at AWE next month, let’s connect!

Otherwise, you can always find me on the Torch Friends Slack channel, and Twitter, where I exist as @blackmaas.