Note: This is our first blog post for 3D app developers. Over the last year we’ve published more than forty posts to inspire designers and to share our insights into the fast-evolving 3D market. Building Torch AR, a complex, multi-user, interactive mobile AR app, has taught us some important lessons that can help you further your own augmented reality projects. Stay tuned for more posts that explain how we built Torch and share the useful tools and tricks we’ve learned in the world of 3D app dev. - Josh

Torch + Godot

At Torch we are building an app that allows anyone to design, prototype, test, and soon deploy mobile AR apps on their phone or tablet (currently iOS/ARKit only). We use Godot, an open source game engine, to handle the majority of the spatial work. Godot is great and we are glad we chose it. Among the many reasons we like Godot: it’s lightweight, it’s free to use, and the Godot community is a pleasure to work with. The fact that we can modify it to fit our needs is pretty high up there, too.

After all, we are building one of the first, and certainly among the most complex, multi-user, multi-scene, interactive augmented reality apps around. We are bound to find things we need to do that Godot doesn’t support. And since Godot is open source, we can just add the features we need. Simple!

Making rendered objects more believable with shadows

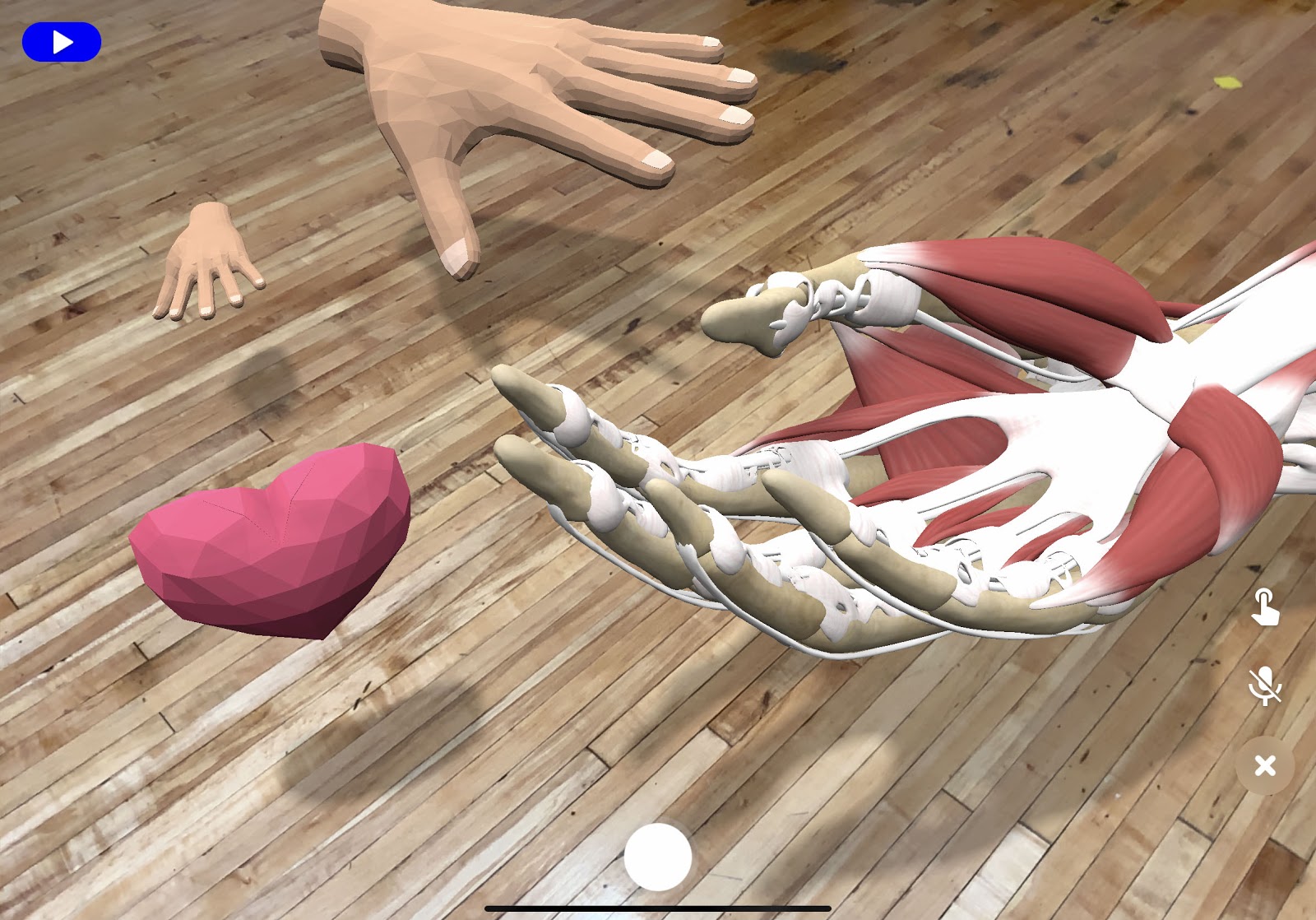

Torch, at its most basic, is a tool that allows a designer to place objects in a 3D space superimposed on the camera feed, all without code. It’s a WYSIWYG editor for 3D. Because Torch is a design tool, attention to detail is important. To make virtual objects feel more believable next to real-world objects, we need to render shadows. This turned out to be a bit more involved than we initially thought, so after we figured out how to do it, we decided to share the solution in case other Godot users run into a similar problem.

We currently use ARKit’s plane detection to figure out what geometry to cast shadows on. Once we know where to cast shadows, we need to actually cast them. The easiest way to accomplish this is a transparent material that receives shadows, like the ShadowMaterial in ThreeJS. Godot doesn't provide this out of the box, so we need to figure out how to do it.

For every pixel on a detected plane, we need to find out whether it is shadowed by a virtual object. If it is, we render that pixel with a shadow. If not, we skip it. Godot provides a ShaderMaterial, which allows developers to write their own shaders. This seems like the way to go!

Looking at the shading language doc, it appears that there are three spots to hook custom code into: “vertex processor” which computes the vertex position, “fragment processor” which computes the albedo and alpha of a fragment, and “light processor” which computes the lighting for a fragment. So we want to write a "light processor" which checks the shadow and sets the alpha accordingly.

Alas, the light processor cannot modify the alpha of the pixel. Let's change this! To do that, we need to learn a bit more about Godot's rendering setup. The key sentence in the shading language doc for us is: "Shaders are, then, translated to native language (real GLSL) and fitted inside the engine's main shader." We need to modify the engine's main shader so that the light processor can change the alpha. Digging around a bit, we find the main shader, which is named "scene.glsl" for the GLES3 backend. This is an uber shader: one large shader whose conditional blocks of code are turned on and off to produce different shaders from shared code. Looking through this large shader, we see a LIGHT_SHADER_CODE tag here. That looks like where our light processor code will be injected.
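To make the uber shader idea concrete, here is a minimal sketch that uses the C++ preprocessor, which works much like the GLSL one. The flag names `USE_SHADOWS` and `USE_FOG` and the function `build_variant_description` are made up for illustration; scene.glsl uses its own set of #defines.

```cpp
#include <string>

// Hypothetical feature flags. In an uber shader, the engine decides which
// #defines to emit before compiling a particular variant.
#define USE_SHADOWS
// #define USE_FOG  // left off for this variant

// Describes which conditional blocks survived preprocessing.
std::string build_variant_description() {
    std::string out = "base";
#ifdef USE_SHADOWS
    out += "+shadows"; // only compiled in when USE_SHADOWS is defined
#endif
#ifdef USE_FOG
    out += "+fog"; // skipped here because USE_FOG is not defined
#endif
    return out;
}
```

The same source produces a different program depending on which flags are defined, which is how one large shader file can back many different materials.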

Now we know where the code is injected, but what actually puts our light processor into the scene shader? Poking around the engine source code a bit, you can find this happens in two spots in shader_gles3.cpp. First, it looks for LIGHT_SHADER_CODE in the shader here, then it inserts the light code from the material (SpatialMaterial or ShaderMaterial) here.
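The find-then-insert idea can be sketched in a few lines of C++. This is a simplified illustration, not the code in shader_gles3.cpp; the function name `inject_at_tag` is hypothetical, and the engine's actual approach may differ in detail.

```cpp
#include <string>

// Replace the first occurrence of `tag` in `main_shader` with `user_code`.
// Returns the shader unchanged if the tag is not present.
std::string inject_at_tag(const std::string &main_shader,
                          const std::string &tag,
                          const std::string &user_code) {
    std::string::size_type pos = main_shader.find(tag);
    if (pos == std::string::npos) {
        return main_shader; // no tag, nothing to inject
    }
    std::string out = main_shader;
    out.replace(pos, tag.size(), user_code);
    return out;
}
```

For example, calling `inject_at_tag(source, "LIGHT_SHADER_CODE", light_code)` would paste the material's light code into the spot the tag occupied.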

Now that we understand how the pieces fit together, we need to make the changes that let the light processor modify alpha. In the scene.glsl shader, the light processor (via the LIGHT_SHADER_CODE tag) is contained in a GLSL function named "light_compute". We need to be able to modify the final alpha in this function somehow. Looking at how this function is called, it seems the easiest way is to pass the current alpha in as an in/out parameter. The steps to accomplish this are:

- Modify light_compute.

- Modify the callers of light_compute.

You can see the code for these modifications on my GitHub repo here.
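As a rough analogy in C++, a GLSL `inout` parameter behaves like a reference parameter: the callee can read the incoming value and write a new one back to the caller. The sketch below is heavily simplified. The real light_compute takes many more parameters, `shade_fragment` is a hypothetical caller, and the body stands in for whatever code the material's light processor supplies.

```cpp
// Simplified stand-in for light_compute with alpha passed as in/out.
void light_compute(float attenuation, float &alpha) {
    // This is where the injected light processor code would run.
    // Here: more shadow (lower attenuation) means more opaque.
    alpha = (1.0f - attenuation) * 0.75f;
}

// Hypothetical caller: starts from the fragment processor's alpha and
// lets the light processor overwrite it.
float shade_fragment(float fragment_alpha, float attenuation) {
    float alpha = fragment_alpha;
    light_compute(attenuation, alpha);
    return alpha;
}
```

Both steps from the list show up here: the function signature gains the extra parameter, and each caller has to pass its current alpha along.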

After doing this, we still don't seem to be able to modify the alpha via the ALPHA variable the way you can in the fragment shader. It turns out we need to add it to servers/visual/shader_types.cpp so that the Godot shader compiler will rename “ALPHA” to “alpha” in the GLSL code. This is also used for the shader editor in the editing environment.
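Conceptually, the compiler needs a table that tells it which built-in names a shader may use and what they become in GLSL. The sketch below is only an illustration of that idea. `RenameTable` and `to_glsl_name` are hypothetical names, rejecting unknown names with an exception is an assumption made for the example, and Godot's actual data structures look different.

```cpp
#include <map>
#include <stdexcept>
#include <string>

// Hypothetical table: built-in name as written in a Godot shader,
// mapped to the identifier used in the generated GLSL.
typedef std::map<std::string, std::string> RenameTable;

// Look up a built-in. A name that was never registered is rejected,
// which is roughly the situation ALPHA was in before the change.
std::string to_glsl_name(const RenameTable &renames, const std::string &builtin) {
    RenameTable::const_iterator it = renames.find(builtin);
    if (it == renames.end()) {
        throw std::invalid_argument("unknown built-in: " + builtin);
    }
    return it->second;
}
```

Registering `"ALPHA"` with the value `"alpha"` in such a table is the analogue of the shader_types.cpp change described above.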

After all of this, we can finally write our custom ShaderMaterial that looks like this:

```
shader_type spatial;

// These flags affect the #defines that are used in the GLES3 scene uber shader.
// blend_mix causes the material to get shadows.
render_mode blend_mix,cull_back;

void fragment() {
    ALBEDO = vec3(0.0, 0.0, 0.0); // Black shadows
    ALPHA = 0.5; // Make sure Godot sends this through the transparent rendering path.
}

void light() {
    // Take the attenuation (shadow strength) and use it to manipulate the alpha.
    // 0.75 is there to let some of the camera feed through.
    ALPHA = clamp(1.0 - length(ATTENUATION), 0.0, 1.0) * 0.75;
}
```
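To get a feel for the numbers the light() expression produces, here is the same arithmetic in plain C++. The `Vec3` struct and the functions `vec_length` and `shadow_alpha` are hypothetical stand-ins for the shader built-ins, and the sketch assumes ATTENUATION is a three-component vector.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical stand-in for the shader's vec3 ATTENUATION value.
struct Vec3 {
    float x, y, z;
};

// Euclidean length, like GLSL's length().
float vec_length(const Vec3 &v) {
    return std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
}

// Mirrors: ALPHA = clamp(1.0 - length(ATTENUATION), 0.0, 1.0) * 0.75;
float shadow_alpha(const Vec3 &attenuation) {
    float shadow = 1.0f - vec_length(attenuation);
    shadow = std::min(std::max(shadow, 0.0f), 1.0f); // clamp to [0, 1]
    return shadow * 0.75f;
}
```

With zero attenuation (fully shadowed) the alpha is 0.75, so a quarter of the camera feed still shows through. With attenuation (1, 1, 1) the length is about 1.73, the clamp brings the result to 0, and the plane is invisible.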

I have a Godot project here that already has this shader set up in a simple scene.

What have we learned after making this modification?

- Godot splits the fragment shader into the fragment and light pieces, and the light piece is run per light. Pretty nice design!

- The main shader that Godot uses is an uber shader with lots of #defines which change how the shader is compiled.

- SpatialMaterial and ShaderMaterial flags/options mostly map to #defines in scene.glsl.

- Adding variables to a Godot shader involves modifying shader_types so the shader compiler knows about the variable.

What next?

Thanks for reading. I hope this has improved your understanding of the Godot rendering system a bit! If you want to see how Godot renders shadows in an AR context, check out Torch, our mobile AR prototyping tool. Developers are one of our fastest-growing user groups. We’ve been told this is because they use Torch to communicate concepts to designers and others on their team instead of relying on drawings and hand gestures, but we hope we are also inspiring others to try their hand at building mobile AR applications.

If you are interested in learning more about how to develop mobile AR applications or have questions about my post, join us in our Torch Friends Slack channel, where the engineering team has been known to lurk. Torch Friends Slack is also a good place to keep on top of changes we plan to commit back to the Godot project in the coming months.
